Adventures in building custom datasets via Web Scraping — ESPN Articles Edition

I recently decided that I wanted to build a Named-Entity Recognition (NER) model geared towards sports, preferably the NBA. I had no data of my own and did not want to use any existing datasets, so I homed in on ESPN as my preferred data source. The site has plenty of writers and stories, and it provides RSS feeds aggregated by sport.

e.g. https://www.espn.com/espn/rss/nba/news

Procedure

- Call the RSS feed and take the article urls out of the response.

- Using those urls, pull down the article from the website.

- Parse out the text from the HTML response.
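To make the first step concrete, here is a minimal sketch of what pulling article URLs out of an RSS response involves. It uses only the standard library rather than the feedparser package used later in the post, and the feed below is a hand-written, hypothetical sample, not a real ESPN response.

```python
import xml.etree.ElementTree as ET

# Hypothetical, hand-written feed; a real response carries many more fields.
SAMPLE_RSS = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Sample NBA feed</title>
    <item>
      <title>Story one</title>
      <link>https://example.com/nba/story/_/id/1/story-one</link>
    </item>
    <item>
      <title>Story two</title>
      <link>https://example.com/nba/story/_/id/2/story-two</link>
    </item>
  </channel>
</rss>"""


def extract_links(rss_xml: str) -> dict:
    """Return the feed title and the link of every <item> in the channel."""
    channel = ET.fromstring(rss_xml).find('channel')
    return {
        'title': channel.findtext('title'),
        'links': [item.findtext('link') for item in channel.findall('item')],
    }


result = extract_links(SAMPLE_RSS)
print(result)
```

The returned dictionary has the same shape (a title plus a list of links) that the rest of the post passes around.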

For the next few sections, I will be using a simple requestor/parser pattern, where a requestor takes in a parser through its constructor. Responses from the requestor flow through the parser, and the parser's output is returned to the caller. This setup may seem like overkill, but it makes it much easier to add other RSS feeds later, e.g. medium, cbssports, etc. It turns into a plug-and-play situation.
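A stripped-down sketch of that pattern, with no network calls, might look like the following. Every name here (`AbstractParser`, `WordCountParser`, `UpperCaseParser`, `FakeRequestor`) is hypothetical and exists only to show how swapping the parser changes the output while the requestor stays untouched.

```python
from abc import ABC, abstractmethod


class AbstractParser(ABC):
    """Hypothetical base class: turns a raw response into a dict."""

    @abstractmethod
    def run(self, raw: str) -> dict:
        pass


class WordCountParser(AbstractParser):
    def run(self, raw: str) -> dict:
        return {'words': len(raw.split())}


class UpperCaseParser(AbstractParser):
    def run(self, raw: str) -> dict:
        return {'text': raw.upper()}


class FakeRequestor:
    """Stand-in requestor: returns canned text instead of calling a URL."""

    def __init__(self, parser: AbstractParser):
        self.parser = parser

    def run(self, url: str) -> dict:
        raw = f'canned response for {url}'  # a real requestor would fetch here
        return self.parser.run(raw)


url = 'https://example.com/feed'
word_count = FakeRequestor(WordCountParser()).run(url)
upper_case = FakeRequestor(UpperCaseParser()).run(url)
print(word_count)
print(upper_case)
```

The requestor never needs to know which parser it holds, which is what makes adding another source a matter of writing one new parser class.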

** The link to the full Jupyter notebook is available at the end of the post.

Classes for Getting Articles from the RSS Feed

AbstractRequestor:

    from abc import ABC, abstractmethod


    class AbstractRequestor(ABC):

        @abstractmethod
        def run(self, url: str) -> dict:
            pass

RssRequestor — Makes calls to the RSS Feed

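    import feedparser


    class RssRequestor(AbstractRequestor):
        # Quoted annotation, because RssParser is defined further down.
        parser: 'RssParser'

        def __init__(self, parser: 'RssParser'):
            self.parser = parser

        def run(self, url: str) -> dict:
            # feedparser fetches and parses the feed in one call.
            feed = feedparser.parse(url)
            return self.parser.run(feed)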

- Calls the feed and passes the parsed result (a dictionary-like object from feedparser, not raw JSON) to an ‘RssParser’.

Class for Parsing the RSS Feed Response

RssParser:

    class RssParser(object):

        def run(self, feed: dict) -> dict:
            return {
                'title': feed.feed.title,
                'links': [entry.link for entry in feed.entries]
            }

- Receives the parsed feed and pulls out the link from each entry.
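To see the shape of the parser's output without hitting the network, the parser can be run against a stand-in object. In this sketch `fake_feed` is a hypothetical substitute built from `SimpleNamespace`; it only mimics the two attributes the parser reads (`feed.title` and `entries[i].link`) and is not what feedparser actually returns.

```python
from types import SimpleNamespace


class RssParser:
    """Same logic as the parser above, repeated so the sketch is self-contained."""

    def run(self, feed) -> dict:
        return {
            'title': feed.feed.title,
            'links': [entry.link for entry in feed.entries],
        }


# Hypothetical stand-in for a parsed feed; URLs are placeholders.
fake_feed = SimpleNamespace(
    feed=SimpleNamespace(title='Sample NBA feed'),
    entries=[
        SimpleNamespace(link='https://example.com/story/1'),
        SimpleNamespace(link='https://example.com/story/2'),
    ],
)

parsed = RssParser().run(fake_feed)
print(parsed)
```

Because the parser only relies on attribute access, a stand-in like this is enough to unit test it in isolation.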

Class for Requesting Articles

WebsiteRequestor — Makes calls to a Website to get the HTML

    import requests


    class WebsiteRequestor(AbstractRequestor):
        # Quoted annotation, because AbstractWebsiteParser is defined further down.
        parser: 'AbstractWebsiteParser'

        def __init__(self, parser: 'AbstractWebsiteParser'):
            self.parser = parser

        def run(self, url: str) -> dict:
            response = requests.get(url)
            # Stop early on anything other than a successful response.
            assert response.status_code == 200, \
                f'status code: {response.status_code}'
            return self.parser.run(response.text)

- Makes a request and passes the response to an ‘AbstractWebsiteParser’. This allows us to set up a different parser per website, e.g. espn, cbssports, cnn, etc.

Classes for Parsing Articles

AbstractWebsiteParser:

    class AbstractWebsiteParser(ABC):

        @abstractmethod
        def run(self, html: str) -> dict:
            pass

EspnWebsiteParser — Purpose is to parse an ESPN Article

    from bs4 import BeautifulSoup


    class EspnWebsiteParser(AbstractWebsiteParser):

        def run(self, html: str) -> dict:
            bs = BeautifulSoup(html, 'html.parser')

            # Page furniture that is not part of the article body.
            elements_to_remove = [
                bs.find_all('ul', 'article-social'),
                bs.find_all('div', 'article-meta'),
                bs.find_all('aside'),
                bs.find_all('div', 'teads-inread'),
                bs.find_all('figure'),
                bs.find_all('div', 'cookie-overlay')
            ]

            for element_search in elements_to_remove:
                for tag in element_search:
                    tag.decompose()

            # Replace each link with its plain text
            # (replace_with is the current name for replaceWith).
            for a in bs.find_all('a'):
                a.replace_with(a.text)

            p = [p.text for p in bs.find_all('p')]

            return {
                'text': '\n'.join(p).strip()
            }

- Removes just enough junk (social links, article metadata, asides, ad containers, figures and the cookie overlay) to leave the article body.

- Returns the concatenated text from each paragraph.
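The same "drop the junk, keep the paragraphs" idea can be sketched with only the standard library, which is handy for experimenting without BeautifulSoup installed. This is a simplified illustration and not the parser used in the post: `ParagraphExtractor`, `extract_text` and the sample HTML are hypothetical, and the sketch skips content by tag name only (`aside`, `figure`), not by CSS class.

```python
from html.parser import HTMLParser


class ParagraphExtractor(HTMLParser):
    """Collect text inside <p> tags, ignoring anything nested in skipped tags."""

    SKIPPED_TAGS = {'aside', 'figure'}

    def __init__(self):
        super().__init__()
        self.skip_depth = 0        # greater than 0 while inside a skipped tag
        self.in_paragraph = False
        self.paragraphs = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self.skip_depth += 1
        elif tag == 'p' and self.skip_depth == 0:
            self.in_paragraph = True
            self.paragraphs.append('')

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1
        elif tag == 'p':
            self.in_paragraph = False

    def handle_data(self, data):
        # Link text arrives here too, so <a> tags collapse to plain text.
        if self.in_paragraph and self.skip_depth == 0:
            self.paragraphs[-1] += data


def extract_text(html: str) -> dict:
    parser = ParagraphExtractor()
    parser.feed(html)
    parser.close()
    return {'text': '\n'.join(parser.paragraphs).strip()}


# Hypothetical article markup, made up for this sketch.
SAMPLE_HTML = """
<article>
  <aside><p>Related: something else</p></aside>
  <p>The <a href="/team">Lakers</a> won on Tuesday.</p>
  <figure><figcaption>Photo credit</figcaption></figure>
  <p>They play again Friday.</p>
</article>
"""

article = extract_text(SAMPLE_HTML)
print(article['text'])
```

The paragraph nested in the `aside` and the figure caption are dropped, while the link inside the first paragraph is kept as plain text.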

Putting It All Together

Runner:

    import time
    from typing import Iterator


    class Runner(object):
        rss_requestor: AbstractRequestor
        website_requestor: AbstractRequestor
        sleep_time_in_seconds: int

        def __init__(self,
                     rss_requestor: AbstractRequestor,
                     website_requestor: AbstractRequestor,
                     sleep_time_in_seconds: int = 30):
            self.rss_requestor = rss_requestor
            self.website_requestor = website_requestor
            self.sleep_time_in_seconds = sleep_time_in_seconds

        def run(self, url: str) -> Iterator[tuple]:
            feed = self.rss_requestor.run(url)
            for link in feed['links']:
                response = self.website_requestor.run(link)
                text = response['text']
                yield (link, text)
                # Pause between requests to go easy on the site.
                time.sleep(self.sleep_time_in_seconds)

- Takes in our previously built requestors.

- Yields a tuple with the link and the parsed content for each article.

- A major point of the run method is sleeping after each request. Seriously, be nice!
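Because the sleep sits after the `yield`, it only runs when the caller asks for the next article. The sketch below isolates that behavior with stand-ins: `fetch_politely` is a hypothetical helper, the `fetch` lambda replaces the real request, and the `sleep` parameter is injectable so the pauses can be recorded instead of actually waited out.

```python
import time
from typing import Callable, Iterable, Iterator, Tuple


def fetch_politely(links: Iterable[str],
                   fetch: Callable[[str], str],
                   sleep_time_in_seconds: int = 30,
                   sleep: Callable[[float], None] = time.sleep
                   ) -> Iterator[Tuple[str, str]]:
    """Yield (link, text) pairs, pausing after each one."""
    for link in links:
        yield link, fetch(link)
        # Runs only when the consumer asks for the next item.
        sleep(sleep_time_in_seconds)


recorded_sleeps = []  # collects the requested pauses instead of sleeping

results = list(fetch_politely(
    ['https://example.com/a', 'https://example.com/b'],
    fetch=lambda link: f'text of {link}',   # stand-in for a real request
    sleep_time_in_seconds=30,
    sleep=recorded_sleeps.append,
))

print(results)
print(recorded_sleeps)
```

Passing the sleep function in as a parameter is just one way to keep the delay in production code while keeping tests fast.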

Final Setup — Runs and saves off the content

    import re

    output_directory = '../data/espn/nba/documents'
    url = 'https://www.espn.com/espn/rss/nba/news'

    rss_requestor = RssRequestor(RssParser())
    website_requestor = WebsiteRequestor(EspnWebsiteParser())
    runner = Runner(rss_requestor, website_requestor)

    # Captures the path segment that follows /id/ or /page/.
    story_id_pattern = re.compile(r'/(?:id|page)/([^/]+)/')

    for link, text in runner.run(url):
        story_id_search = story_id_pattern.search(link)
        assert story_id_search is not None, f'error: {link}'

        story_id = story_id_search.group(1)
        article_path = f'{output_directory}/{story_id}.txt'

        # Opening with 'w' overwrites any earlier copy of the article.
        with open(article_path, 'w') as output:
            output.write(text)

        print(f'finished: {article_path}')

- Each run will overwrite an article if it was previously parsed. These articles are often updated, especially if the story is fluid, so this made sense for me.

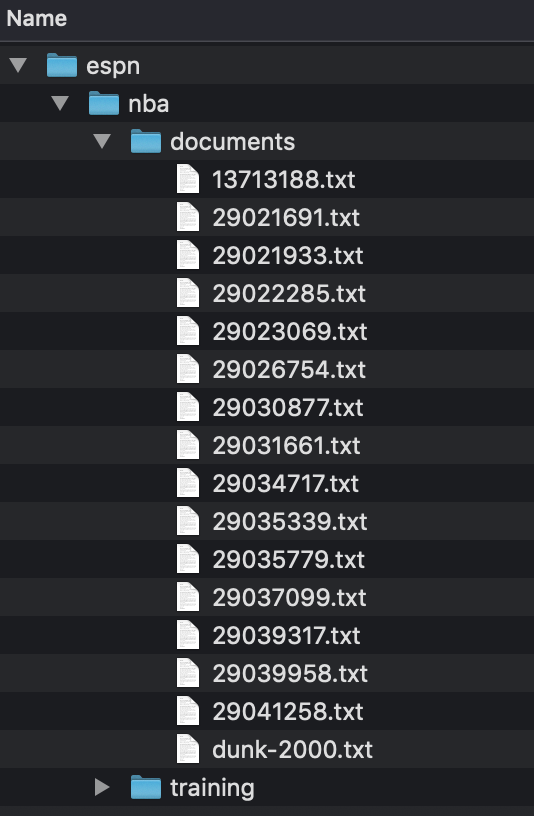

- Using regex to pull out the story id from the link. I am using this as the name of the file.

- We can now run this periodically or set it up as a task that runs automatically, e.g. with luigi. Whatever works best for you.

- This could also be changed to push out to a CSV. I am going to be building out the training/testing datasets per story, so keeping them separate works best for me.
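As a rough illustration of the story-id regex and of the CSV alternative mentioned above, the sketch below runs the pattern over a few made-up links and writes the rows to an in-memory buffer. The URLs, the `story_id` helper and the column names are assumptions for the example; in practice the buffer would be a file opened with `newline=''`.

```python
import csv
import io
import re

# Captures the path segment that follows /id/ or /page/.
STORY_ID_PATTERN = re.compile(r'/(?:id|page)/([^/]+)/')


def story_id(link: str):
    """Return the story id found in the link, or None when there is no match."""
    match = STORY_ID_PATTERN.search(link)
    return match.group(1) if match else None


# Hypothetical links and texts, shaped like the (link, text) tuples from the runner.
articles = [
    ('https://www.espn.com/nba/story/_/id/12345/sample-headline',
     'First article text.'),
    ('https://www.espn.com/nba/story/_/page/sample-page/another-headline',
     'Second article text.'),
]

buffer = io.StringIO()  # stands in for a real CSV file
writer = csv.writer(buffer)
writer.writerow(['story_id', 'link', 'text'])
for link, text in articles:
    writer.writerow([story_id(link), link, text])

print(buffer.getvalue())

# The pattern expects another path segment after the id, so this link has no match.
print(story_id('https://www.espn.com/nba/story/_/id/12345'))
```

The last call shows why the final setup asserts on the match: a link without a trailing segment after the id would not produce a file name.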

Results — Let there be data!